Random sampling from probability distributions

What about it?

To me, this is the most counterintuitive thing I've seen so far from ChatGPT (3.5, the free version).

Here's the prompt I used on a fresh session:

"Generate 60 random numbers coming from an exponential distribution with lambda=6.4. Don't write any code"

It outputs some numbers:

0.013, 0.078, 0.266, 0.040, 0.117, 0.283, 0.048, 0.078, 0.181, 0.134, 0.017, 0.160, 0.052, 0.196, 0.096, 0.098, 0.019, 0.048, 0.141, 0.071, 0.106, 0.023, 0.197, 0.062, 0.029, 0.072, 0.111, 0.166, 0.080, 0.027, 0.124, 0.016, 0.135, 0.052, 0.078, 0.161, 0.078, 0.139, 0.068, 0.066, 0.039, 0.056, 0.018, 0.082, 0.180, 0.086, 0.024, 0.022, 0.057, 0.042, 0.050, 0.104, 0.099, 0.062, 0.016, 0.186, 0.043, 0.085, 0.145, 0.110.

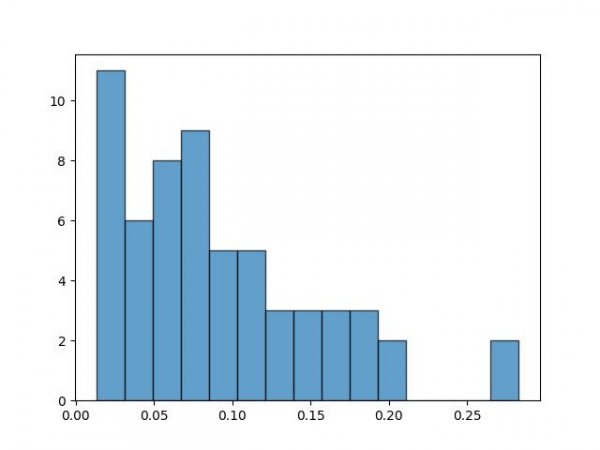

When I plot a histogram of these numbers, it looks close to what I would expect from an exponential distribution with that parameter.
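For anyone who wants to check this beyond eyeballing the histogram, here is a rough sketch that compares the numbers above with the theoretical exponential distribution. It uses only the standard library. The helper names (`exp_cdf`, `ks_statistic`) are made up for illustration, and the critical value is the usual large-sample approximation for a 5% Kolmogorov-Smirnov test, so treat the verdict as indicative rather than rigorous.

```python
import math

LAMBDA = 6.4  # rate parameter from the prompt

# The 60 numbers ChatGPT returned, copied from the post.
samples = [
    0.013, 0.078, 0.266, 0.040, 0.117, 0.283, 0.048, 0.078, 0.181, 0.134,
    0.017, 0.160, 0.052, 0.196, 0.096, 0.098, 0.019, 0.048, 0.141, 0.071,
    0.106, 0.023, 0.197, 0.062, 0.029, 0.072, 0.111, 0.166, 0.080, 0.027,
    0.124, 0.016, 0.135, 0.052, 0.078, 0.161, 0.078, 0.139, 0.068, 0.066,
    0.039, 0.056, 0.018, 0.082, 0.180, 0.086, 0.024, 0.022, 0.057, 0.042,
    0.050, 0.104, 0.099, 0.062, 0.016, 0.186, 0.043, 0.085, 0.145, 0.110,
]


def exp_cdf(x, lam):
    """CDF of the exponential distribution: F(x) = 1 - exp(-lam * x)."""
    return 1.0 - math.exp(-lam * x)


def ks_statistic(data, cdf):
    """Largest gap between the empirical CDF of `data` and `cdf`."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        f = cdf(x)
        # The empirical CDF jumps from i/n to (i+1)/n at x; check both sides.
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


sample_mean = sum(samples) / len(samples)
theoretical_mean = 1.0 / LAMBDA  # mean of Exp(lambda) is 1/lambda

ks = ks_statistic(samples, lambda x: exp_cdf(x, LAMBDA))
# Approximate 5% critical value for a one-sample KS test (large-n formula).
critical = 1.36 / math.sqrt(len(samples))

print(f"sample mean      = {sample_mean:.4f}")
print(f"theoretical mean = {theoretical_mean:.4f}")
print(f"KS statistic     = {ks:.4f} (approx. 5% critical value {critical:.4f})")
```

If the KS statistic comes out above the critical value, the numbers are distinguishable from a true exponential sample at roughly the 5% level, even if the histogram looks plausible.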

Given that GPT-3.5 does not have access to a Python interpreter, how on earth is it able to do this? I have also tried other distributions and parameters, and it kind of works. It's not perfect, but with normal distributions the output is usually close to what NumPy would generate.
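For reference, this is roughly what a program does when it samples from an exponential distribution: it draws a uniform random number and pushes it through the inverse CDF. The sketch below is a minimal standard-library version, not NumPy's actual implementation. The function name `sample_exponential` and the seed are assumptions for illustration. Python's built-in `random.expovariate` does the same job in one call.

```python
import math
import random


def sample_exponential(lam, n, rng):
    """Draw n values from Exp(lam) by inverse transform sampling.

    If U is uniform on [0, 1), then -ln(1 - U) / lam follows an
    exponential distribution with rate lam.
    """
    return [-math.log(1.0 - rng.random()) / lam for _ in range(n)]


rng = random.Random(12345)  # arbitrary seed, only for reproducibility
reference = sample_exponential(6.4, 60, rng)

print(f"reference mean = {sum(reference) / len(reference):.4f}")
print(f"reference max  = {max(reference):.4f}")
```

Running this a few times with different seeds gives a feel for how much a genuine 60-value sample varies, which is a useful baseline when judging the model's output.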

I could understand that it may have learnt to interpret Python code to some extent, but honestly I can't find an explanation for random sampling from a probability distribution.

Any thoughts?